Power Up Network Throughput with mtu_bypass

AIX Virtual Ethernet adapters have a setting called mtu_bypass. It allows the AIX networking stack to combine many smaller TCP segments into one larger segment of up to 64KB for sending to the VIO Server's Shared Ethernet Adapter (SEA), and the SEA can likewise send segments of up to 64KB back to the LPAR. Once the packets have been passed from the SEA to the physical adapter, the large 64KB segments are re-segmented to the size specified at the adapter level (mtu/jumbo_size) before being passed to the network switch.
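To put rough numbers on the packet reduction, the sketch below counts how many MTU-sized TCP segments one 64KB send turns into. It assumes a 20-byte IPv4 header and a 20-byte TCP header with no TCP options, so the payload per packet is the MTU minus 40 bytes. Real segments usually carry options such as timestamps, so treat the results as approximate. The function name segments_per_64k is hypothetical.

```sh
#!/bin/sh
# Rough arithmetic: how many MTU-sized TCP segments one 64KB send becomes.
# Assumption: 20-byte IPv4 header + 20-byte TCP header, no TCP options,
# so the payload per packet (MSS) is the MTU minus 40 bytes.
segments_per_64k() {
    mtu=$1
    mss=$((mtu - 40))
    # Ceiling division: 65536 bytes of payload split into MSS-sized pieces.
    echo $(( (65536 + mss - 1) / mss ))
}

echo "MTU 1500: $(segments_per_64k 1500) packets per 64KB"
echo "MTU 9000: $(segments_per_64k 9000) packets per 64KB"
```

This prints 45 packets for a 1500-byte MTU and 8 for a 9000-byte jumbo MTU. With mtu_bypass off, a client with a 1500-byte MTU hands roughly 45 packets to the virtual Ethernet adapter for every 64KB of data. With it on, that becomes a single large segment. If jumbo frames are enabled on the physical adapter, that segment goes on the wire as about 8 frames.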

For applications that send or receive large amounts of network data, this can reduce the number of network packets being sent and received, and most importantly, can reduce the number of CPU cycles needed to process network traffic. This frees up CPU cores on the VIO Servers and client LPARs for applications or other LPARs to use.

In my testing, switching on mtu_bypass reduced the cores consumed by the LPARs and VIO Servers by up to 25% for workloads that send and receive large amounts of data. The mtu_bypass option does not appear to affect applications that send and receive large numbers of small data packets (700 bytes or less): results for this type of workload were the same with mtu_bypass on and off.

For reference, here are the LPAR and VIO Server Configurations.

| | LPAR 1 | LPAR 2 | VIO Server 1 | VIO Server 2 |
|---|---|---|---|---|
| OS Level | 7.2.0.1 | 7.2.0.1 | 2.2.4.21 | 2.2.4.21 |
| System | 9119-MHE | 9119-MHE | 9119-MHE | 9119-MHE |
| Processor Frequency (MHz) | 4024 | 4024 | 4024 | 4024 |
| Entitled Capacity | 2.00 | 2.00 | 2.00 | 2.00 |
| Virtual CPU | 2 | 2 | 2 | 2 |
| Mode | uncapped | uncapped | dedicated donating | dedicated donating |
| Memory (MB) | 16384 | 16384 | 12288 | 12288 |

These are the adapter settings for the VIO Server SEAs.

```
lsdev -dev ent30 -attr
adapter_reset   no
ha_mode         sharing
jumbo_frames    yes
large_receive   yes
largesend       1
queue_size      8192
real_adapter    ent33
```

Real network adapter ent33 settings.

For these tests, both VIO Servers used a 10Gb SR-IOV port, each from a separate SR-IOV adapter. The physical adapter type tested was the PCIe2 10GbE SFP+ SR 4-port Converged Network Adapter. Its settings were:

```
jumbo_frames    yes
jumbo_size      9014
large_receive   yes
large_send      yes
media_speed     10000_Full_Duplex
```
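When reproducing this setup, it helps to confirm that the SEA and the real adapter carry the values listed above. The sketch below is a hypothetical helper, check_attrs. It reads "attribute value ..." lines on stdin (the first two columns of lsattr -El output, for example from oem_setup_env on the VIO Server) and reports any attribute that differs from the expected attribute=value pairs given as arguments. It is an illustration, not an IBM tool.

```sh
#!/bin/sh
# Sketch: compare "attribute value ..." lines on stdin with expected
# attribute=value pairs passed as arguments. Prints mismatches and
# attributes that were not found; returns non-zero if there were any.
check_attrs() {
    awk -v want="$*" '
        BEGIN {
            bad = 0
            n = split(want, pairs, " ")
            for (i = 1; i <= n; i++) {
                split(pairs[i], kv, "=")
                expect[kv[1]] = kv[2]
            }
        }
        # Compare the value column of each attribute we care about.
        ($1 in expect) {
            seen[$1] = 1
            if ($2 != expect[$1]) {
                printf "%s: found %s, expected %s\n", $1, $2, expect[$1]
                bad = 1
            }
        }
        END {
            for (a in expect)
                if (!(a in seen)) { printf "%s: not found\n", a; bad = 1 }
            exit bad
        }'
}
```

For example, lsattr -El ent30 | check_attrs largesend=1 large_receive=yes jumbo_frames=yes checks the SEA. The same function with jumbo_size=9014 large_send=yes large_receive=yes checks the real adapter.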

For the Client LPARs.

The mtu_bypass attribute was switched off or on for each test, and we increased the default number of pre-allocated receive buffers for the ent device. Changing mtu_bypass is dynamic and takes effect immediately: use chdev -l en0 -a mtu_bypass=on (or off). There is no need to change the MTU size of the en device.

```
lsattr -El en0
mtu          1500
mtu_bypass   off    <=== This was changed to on for the mtu_bypass on tests.
```
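Because the setting is switched per interface with chdev, a small wrapper makes it easy to flip several interfaces at once. The sketch below is hypothetical: the function name set_mtu_bypass and the DRY_RUN switch are my own. It assumes it runs as root on the client LPAR. Only chdev -l enX -a mtu_bypass=on|off comes from the post. The read-back uses lsattr -F value to print just the attribute value.

```sh
#!/bin/sh
# Sketch: switch mtu_bypass on or off for one or more en interfaces.
# Usage: set_mtu_bypass on|off en0 [en1 ...]
# With DRY_RUN=1 the chdev commands are printed instead of executed.
set_mtu_bypass() {
    state=$1
    shift
    case $state in
        on|off) ;;
        *) echo "usage: set_mtu_bypass on|off en0 [en1 ...]" >&2; return 1 ;;
    esac
    for en in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "chdev -l $en -a mtu_bypass=$state"
        else
            chdev -l "$en" -a mtu_bypass="$state" || return 1
            # Read back the value now in effect for this interface.
            lsattr -El "$en" -a mtu_bypass -F value
        fi
    done
}
```

For example, set_mtu_bypass on en0 enables the option for the test interface, and set_mtu_bypass off en0 reverts it for the comparison run.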

Receive buffers on the Client LPARs.

The default buffer settings for network adapters in AIX 7.2 are unchanged from earlier releases, but the default for the buf_mode attribute has changed to min_max. This raises the default min_buf_* values to match the max_buf_* attributes. See my blog post for details. Note that on the VIO Servers, I increased these values to match the client LPARs.

```
entstat -d ent0
Receive Information
Receive Buffers
Buffer Type          Tiny   Small   Medium  Large  Huge
Min Buffers          2048   2048    256     64     64
Max Buffers          2048   2048    256     64     64
Allocated            2048   2048    256     64     64
Registered           2048   2048    256     64     64
History
Max Allocated        2048   2048    256     64     64
Lowest Registered    2047   1996    128     32     56
```
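The History section is the useful part of this output. "Lowest Registered" is the low-water mark for each buffer pool, so comparing it with "Min Buffers" shows how far each pool was drawn down during a test. The sketch below is a hypothetical helper, buffer_headroom, that does this comparison on entstat -d text read from stdin. It matches lines by their first two words, so indentation does not matter. Treating a pool whose low-water mark approaches zero as a candidate for more buffers is my assumption, not an IBM rule.

```sh
#!/bin/sh
# Sketch: for each receive-buffer type in `entstat -d entX` output (stdin),
# print the lowest registered count as a percentage of the configured minimum.
buffer_headroom() {
    awk '
        $1 == "Buffer" && $2 == "Type" {
            n = NF - 2
            for (i = 3; i <= NF; i++) type[i - 2] = $i
        }
        $1 == "Min" && $2 == "Buffers" {
            for (i = 3; i <= NF; i++) min[i - 2] = $i
        }
        $1 == "Lowest" && $2 == "Registered" {
            for (i = 3; i <= NF; i++) low[i - 2] = $i
            for (j = 1; j <= n; j++) {
                pct = (min[j] > 0) ? 100 * low[j] / min[j] : 0
                printf "%-6s lowest %d of %d (%.1f%%)\n", type[j], low[j], min[j], pct
            }
        }'
}
```

For example, entstat -d ent0 | buffer_headroom. On the output above, the Medium and Large pools dipped to 50% of their minimum, while Huge stayed at 87.5%.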

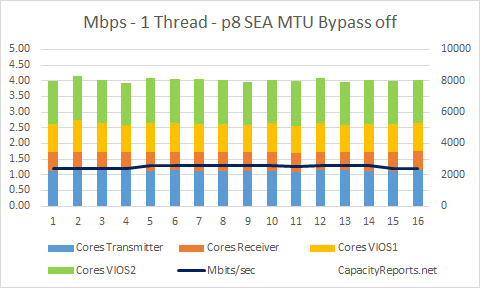

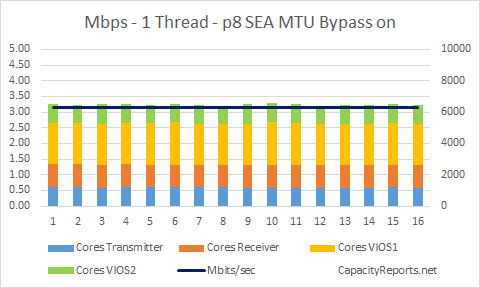

1 Thread Network Bandwidth Tests

The first tests were done with a single network thread. With mtu_bypass off we needed 4.02 cores to achieve 2,500 Mbps, whereas with mtu_bypass on we needed 3.22 cores to achieve 6,200 Mbps. So the core reduction is 20% and the throughput increased by roughly 148%.
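The percentages in these results are simple before/after ratios, and cores per Gbps is a handy way to express the efficiency gain in one number. The sketch below recomputes both from the rounded chart values. The function name compare_runs is hypothetical. The sketch assumes the core figures are the total consumed across the LPARs and VIO Servers.

```sh
#!/bin/sh
# Sketch: recompute the comparison figures for an off/on test pair.
# Arguments: cores_off mbps_off cores_on mbps_on (rounded chart values).
compare_runs() {
    awk -v c0="$1" -v t0="$2" -v c1="$3" -v t1="$4" 'BEGIN {
        printf "core reduction:      %.1f%%\n", 100 * (c0 - c1) / c0
        printf "throughput increase: %.1f%%\n", 100 * (t1 - t0) / t0
        # Efficiency: cores consumed per Gbps of throughput.
        printf "cores per Gbps:      %.2f off, %.2f on\n", c0 / (t0 / 1000), c1 / (t1 / 1000)
    }'
}

compare_runs 4.02 2500 3.22 6200
```

For the single-thread figures this prints a 19.9% core reduction, a 148.0% throughput increase, and 1.61 versus 0.52 cores per Gbps. The eight-thread figures (5.52, 3900, 4.13, 8800) can be passed the same way.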

1 Thread Test - mtu_bypass off

Client command:

```
/usr/bin/iperf -c 192.168.30.181 -fm -P1 -l1M -t60
```

1 Thread Test - mtu_bypass on

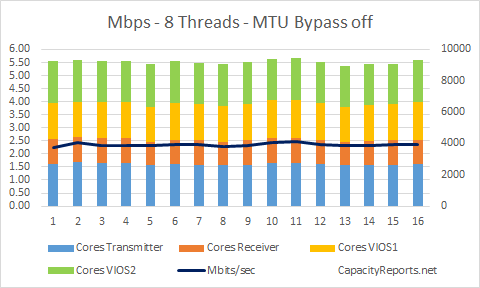
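The off/on comparison can be scripted so that each run uses identical iperf options. This is a minimal sketch under several assumptions: an iperf server is already listening on the target (iperf -s), en0 is the client interface under test, and the script runs as root. The function name ab_iperf and the RUN variable are hypothetical. The iperf options are the ones used in the commands in this post.

```sh
#!/bin/sh
# Sketch: run the same iperf client test with mtu_bypass off, then on.
# Usage: ab_iperf server_ip [streams]
# Set RUN=echo to print the commands instead of executing them.
ab_iperf() {
    server=$1
    streams=${2:-1}
    for state in off on; do
        $RUN chdev -l en0 -a mtu_bypass="$state" || return 1
        echo "=== mtu_bypass=$state, $streams stream(s) ==="
        $RUN /usr/bin/iperf -c "$server" -fm -P"$streams" -l1M -t60
    done
}
```

For the eight-thread test, the equivalent call would be ab_iperf 192.168.10.181 8.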

8 Threads Network Bandwidth Tests

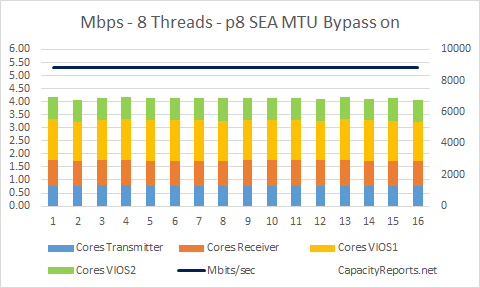

The second tests were done with eight network threads. With mtu_bypass off we needed 5.52 cores to achieve 3,900 Mbps, whereas with mtu_bypass on we needed 4.13 cores to achieve 8,800 Mbps. So the core reduction is 25% and the throughput increased by 124%.

8 Threads Test - mtu_bypass off

```
/usr/bin/iperf -c 192.168.10.181 -fm -P8 -l1M -t60
```

8 Threads Test - mtu_bypass on

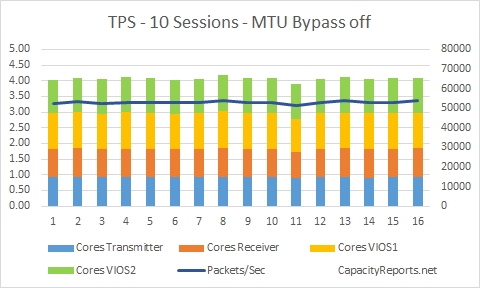

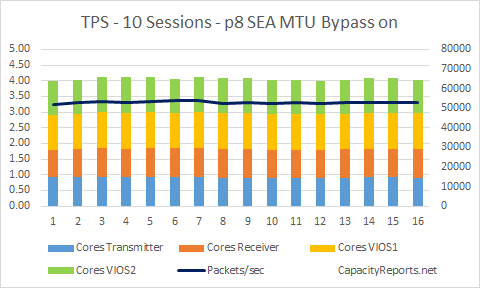

10 Client Sessions, Maximum TPS Tests

The third tests were done with 10 sessions generating the maximum number of transactions per second (TPS). With mtu_bypass both off and on, we needed 4.06 cores to achieve 52,000 TPS. So for applications that send and receive many small data packets (700 bytes or less), there is no performance degradation from switching mtu_bypass on. You achieve all the gains for larger data transfers (backups and restores, for example) and pay no penalty for your smaller application data.

10 Sessions, Maximum TPS - mtu_bypass off

```
/usr/bin/netperf -H 192.168.10.181 -l60 -v10 -t TCP_RR -- -r 700 -D
```

10 Sessions, Maximum TPS - mtu_bypass on

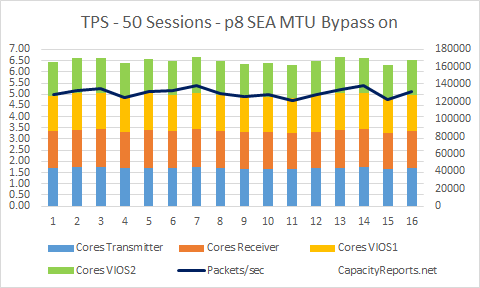

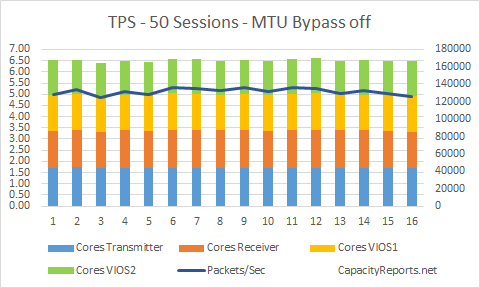
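In stock netperf, the global -v option sets output verbosity rather than a session count. One common way to drive 10 or 50 concurrent request/response sessions is therefore to start one netperf process per session and add up their transaction rates. The sketch below does that. It assumes netserver is running on the target and that each TCP_RR result line has six fields with transactions per second last. The function name run_rr_sessions and the NETPERF override are hypothetical. The test options after "--" are the ones used in this post.

```sh
#!/bin/sh
# Sketch: run N netperf TCP_RR clients in parallel and sum their TPS.
# Usage: run_rr_sessions host sessions
# NETPERF may name a different netperf binary (default /usr/bin/netperf).
run_rr_sessions() {
    host=$1
    n=$2
    tmp=${TMPDIR:-/tmp}/rr.$$
    i=1
    while [ "$i" -le "$n" ]; do
        # -P0 suppresses the test banner; "-r 700 -D" are the test options
        # from the post (700-byte messages, TCP_NODELAY).
        "${NETPERF:-/usr/bin/netperf}" -H "$host" -l60 -P0 -t TCP_RR -- -r 700 -D > "$tmp.$i" &
        i=$((i + 1))
    done
    wait
    # Result lines have six fields; the sixth is transactions per second.
    # Non-numeric sixth fields (header words) add zero to the sum.
    cat "$tmp".* | awk 'NF == 6 { sum += $6 } END { printf "total TPS: %.0f\n", sum }'
    rm -f "$tmp".*
}
```

For example, run_rr_sessions 192.168.10.181 10 for the 10-session test, or 50 for the next one.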

50 Client Sessions, Maximum TPS Tests

The fourth tests were done with 50 sessions generating the maximum number of transactions per second (TPS). Again, with mtu_bypass both off and on, we needed 6.50 cores to achieve 131,000 TPS, so switching mtu_bypass on carries no penalty for small-packet workloads at the higher session count either.
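Because the core counts were identical with mtu_bypass off and on, the two TPS tests can be summarised as a CPU cost per transaction. This minimal sketch assumes the quoted core figures are the total consumed across the LPARs and VIO Servers. The helper name cost_per_txn is hypothetical.

```sh
#!/bin/sh
# Sketch: CPU cost per transaction for a TPS test.
# core-microseconds per transaction = cores consumed * 1,000,000 / TPS
cost_per_txn() {
    awk -v cores="$1" -v tps="$2" 'BEGIN {
        printf "%.1f core-us per transaction\n", cores * 1000000 / tps
    }'
}

cost_per_txn 4.06 52000     # 10 sessions
cost_per_txn 6.50 131000    # 50 sessions
```

This works out to about 78.1 core-microseconds per transaction at 10 sessions and 49.6 at 50 sessions, regardless of the mtu_bypass setting.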

50 Sessions, Maximum TPS - mtu_bypass off

```
/usr/bin/netperf -H 192.168.10.181 -l60 -v50 -t TCP_RR -- -r 700 -D
```

50 Sessions, Maximum TPS - mtu_bypass on