Power8 E880 Internal vSwitch Tests

These are the results of my internal vSwitch and external switch network performance tests.

In these tests I have done only basic tuning of the network adapters and settings. The aim is to demonstrate the differences in bandwidth between cores, chips and nodes when using the internal vSwitch, not to achieve the highest possible bandwidth.

For reference, here are the LPAR and VIO Server Configurations.

| | LPAR | LPAR | VIO Server | VIO Server |
|---|---|---|---|---|
| OS Level | 7.2.0.1 | 7.2.0.1 | 2.2.4.21 | 2.2.4.21 |
| System | 9119-MHE | 9119-MHE | 9119-MHE | 9119-MHE |
| Processor Frequency (MHz) | 4024 | 4024 | 4024 | 4024 |
| Entitled Capacity | 2.00 | 2.00 | 2.00 | 2.00 |
| Virtual CPU | 2 | 2 | 2 | 2 |
| Mode | uncapped | uncapped | dedicated donating | dedicated donating |
| Memory (MB) | 16384 | 16384 | 12288 | 12288 |

Note: LPARs were running SMT4.

These are the adapter settings for the VIO Server 1Gbit SEAs.

lsdev -dev ent42 -attr

adapter_reset no

ha_mode sharing

jumbo_frames no

large_receive yes

largesend 1

queue_size 8192

real_adapter ent38

Real 1Gbit network adapter ent38 settings.

These are the settings and the adapter type. For these tests, both VIO Servers had a 1Gb SRIOV port from separate SRIOV adapters. This is the physical adapter type tested: PCIe2 100/1000 Base-TX 4-port Converged Network Adapter.

jumbo_frames no

large_receive yes

large_send yes

Physical Port Speed: 1Gbps Full Duplex

These are the adapter settings for the VIO Server 10Gbit SEAs.

lsdev -dev ent30 -attr

adapter_reset no

ha_mode sharing

jumbo_frames yes

large_receive yes

largesend 1

queue_size 8192

real_adapter ent33

Real 10Gbit network adapter ent33 settings.

These are the settings and the adapter type. For these tests, both VIO Servers had a 10Gb SRIOV port from separate SRIOV adapters. This is the physical adapter type tested: PCIe2 10GbE SFP+ SR 4-port Converged Network Adapter.

jumbo_frames yes

jumbo_size 9014

large_receive yes

large_send yes

media_speed 10000_Full_Duplex

For the Client LPARs.

Adapter mtu_bypass was switched on for each test, and I increased the default number of pre-allocated receive buffers for the ent device. Changing the mtu_bypass option is dynamic and takes effect immediately. Use chdev -l en0 -a mtu_bypass=on (or off). There is no need to change the en device MTU size.

lsattr -El en0

mtu 1500

mtu_bypass on

For the Client LPARs.

In AIX 7.2 the default settings for the network adapter buffers are unchanged, but the default for the buf_mode attribute has changed to min_max. This raises each min_buf_* value to match the corresponding max_buf_* value. See my blog post for details. Note that on the VIO Servers I increased these values to match the client LPARs.

entstat -d ent0

Receive Information

Receive Buffers

Buffer Type Tiny Small Medium Large Huge

Min Buffers 2048 2048 256 64 64

Max Buffers 2048 2048 256 64 64

Allocated 2048 2048 256 64 64

Registered 2048 2048 256 64 64

History

Max Allocated 2048 2048 256 64 64

Lowest Registered 2047 1996 128 32 56
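To compare the Registered and Lowest Registered rows at a glance, a small awk filter can print the difference for each buffer type. This is only a sketch: the function name buffer_dip is made up for illustration, the sample rows are copied from the output above, and it assumes the row labels appear exactly as shown here.

```shell
#!/bin/sh
# Sample rows copied from the entstat output above.
sample='Buffer Type Tiny Small Medium Large Huge
Registered 2048 2048 256 64 64
Lowest Registered 2047 1996 128 32 56'

# buffer_dip (hypothetical helper): reads entstat-style text on stdin and
# prints, per buffer type, Registered minus Lowest Registered.
buffer_dip() {
    awk '
        { sub(/^[ \t]+/, "") }   # tolerate indented output
        /^Buffer Type/       { n = NF - 2; for (i = 3; i <= NF; i++) name[i-2] = $i }
        /^Registered/        { for (i = 2; i <= NF; i++) reg[i-1] = $i }
        /^Lowest Registered/ { for (i = 3; i <= NF; i++) low[i-2] = $i }
        END { for (i = 1; i <= n; i++) printf "%s %d\n", name[i], reg[i] - low[i] }
    '
}

printf '%s\n' "$sample" | buffer_dip
```

On a live system the same filter could be fed directly, for example entstat -d ent0 | buffer_dip, provided the output layout matches the rows above.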

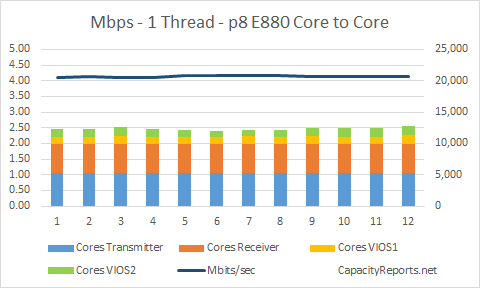

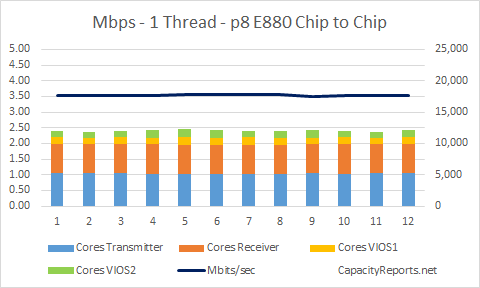

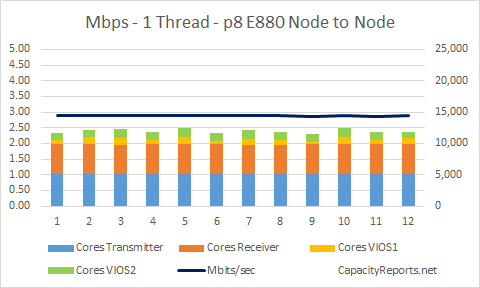

1 Thread iperf command: /usr/bin/iperf -c 192.168.30.181 -fm -P1 -l1M -t60

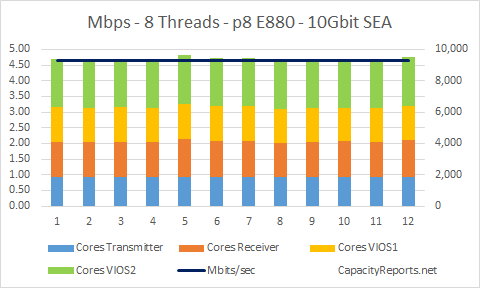

8 Threads iperf command: /usr/bin/iperf -c 192.168.30.181 -fm -P8 -l1M -t60
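The two iperf commands differ only in the -P value, so both runs can be driven from one small wrapper. The sketch below is not the script used for these tests: the iperf_cmd helper and the RUN and SERVER variables are assumptions added for illustration, and it expects an iperf server to be listening on the target address already. By default it only prints the commands.

```shell
#!/bin/sh
# Target address taken from the commands above; override with SERVER=...
SERVER=${SERVER:-192.168.30.181}

# iperf_cmd (hypothetical helper): print the client command for a given
# number of parallel threads ($1), using the same flags as in the tests.
iperf_cmd() {
    printf '/usr/bin/iperf -c %s -fm -P%s -l1M -t60\n' "$SERVER" "$1"
}

for threads in 1 8; do
    cmd=$(iperf_cmd "$threads")
    if [ "${RUN:-0}" = "1" ]; then
        $cmd            # actually run the test (RUN=1 is this sketch's own switch)
    else
        echo "$cmd"     # dry run: just show what would be executed
    fi
done
```

Running it with RUN=1 set would execute the 1 thread and 8 thread tests back to back, 60 seconds each.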

1 Thread Test - Core to Core - Same Chip

1 Thread Test - Chip to Chip - Same Node

1 Thread Test - Node to Node

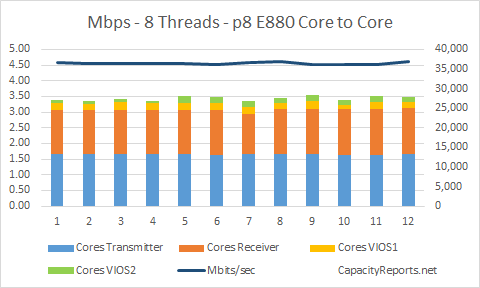

8 Threads Test - Core to Core - Same Chip

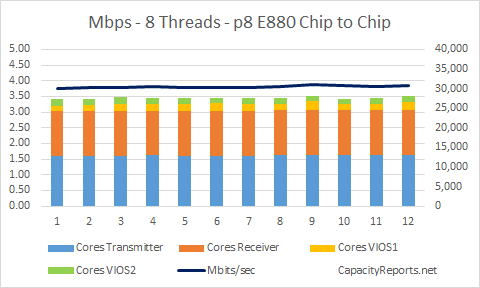

8 Threads Test - Chip to Chip - Same Node

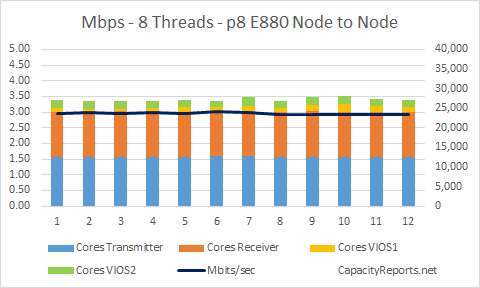

8 Threads Test - Node to Node

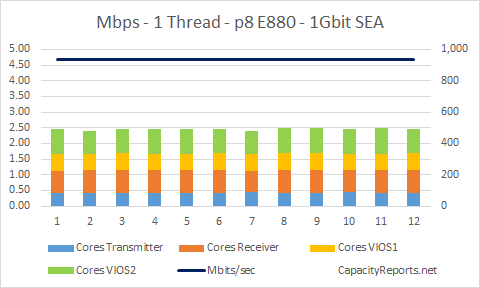

1 Thread Test - SEA and 1Gbit Network

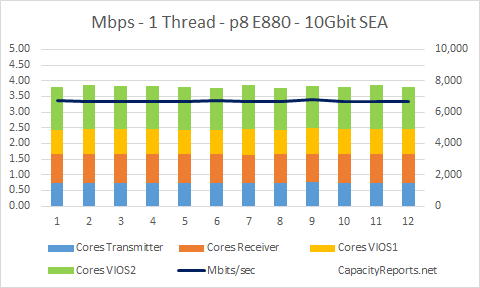

1 Thread Test - SEA and 10Gbit Network

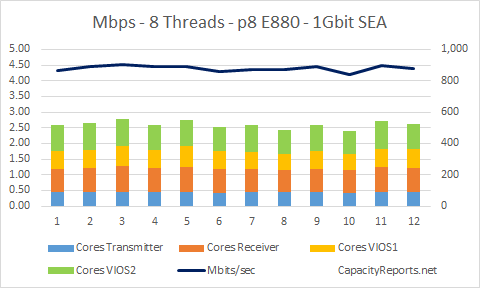

8 Threads Test - SEA and 1Gbit Network

8 Threads Test - SEA and 10Gbit Network