vNIC Performance Testing with No VIOS SEA Adapter

Here are the results of my vNIC performance testing, conducted on a 9119-MHE (E880). No other workloads were running during the tests, so the results should give a good indication of the throughput achievable with vNIC.

The adapters are EN0J PCIe3 4-Port 10Gb FCoE & 1GbE cards. Each test was run eight times and the results were averaged.
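The repeat-and-average step can be sketched in Python as follows. This is a minimal sketch, not the script used for these tests: the names `parse_mbits` and `average_throughput` are hypothetical, the command is the 1-thread iperf client command listed further down, and the parsing assumes iperf 2 style report lines ending in `Mbits/sec` (which is what `-fm` requests).

```python
import re
import statistics
import subprocess

# Client command from the 1-thread test in this post.
IPERF_CMD = ["/usr/bin/iperf", "-c", "192.168.30.181", "-fm", "-P1", "-l1M", "-t60"]

# Assumption: report lines end with a bandwidth column such as "9387 Mbits/sec".
_BANDWIDTH = re.compile(r"(\d+(?:\.\d+)?)\s+Mbits/sec")


def parse_mbits(output):
    """Return the last Mbits/sec figure found in the iperf output.

    With -P1 this is the single stream's result. With -P8 the last
    report line is assumed to be the [SUM] line.
    """
    matches = _BANDWIDTH.findall(output)
    if not matches:
        raise ValueError("no Mbits/sec figure found in iperf output")
    return float(matches[-1])


def _run(cmd):
    """Run one client command and return its standard output."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def average_throughput(cmd=IPERF_CMD, runs=8, runner=_run):
    """Run cmd `runs` times; return (mean, per-run list) in Mbits/sec."""
    results = [parse_mbits(runner(cmd)) for _ in range(runs)]
    return statistics.mean(results), results
```

Calling `average_throughput()` with no arguments would run the 60-second test eight times in sequence; the `runner` parameter exists only so the parsing and averaging can be exercised without a live iperf server.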

For these tests, the VIO Servers did not have a SEA adapter configured, so the results show the VIO Server CPU load from the vnicserver_crq threads alone. I ran exactly the same tests with SEA adapters configured on the VIO Servers to show the extra VIO Server CPU utilisation from bridging the SEA traffic.

For reference, here are my LPAR and VIO Server configurations.

| | LPAR | LPAR | VIO Server | VIO Server |
|---|---|---|---|---|
| OS Level | 7.2.0.1 | 7.2.0.1 | 2.2.4.21 | 2.2.4.21 |
| System | 9119-MHE | 9119-MHE | 9119-MHE | 9119-MHE |
| Processor Frequency (MHz) | 4024 | 4024 | 4024 | 4024 |
| Entitled Capacity | 2.00 | 2.00 | 2.00 | 2.00 |
| Virtual CPU | 2 | 2 | 2 | 2 |
| Mode | uncapped | uncapped | dedicated donating | dedicated donating |
| Memory (MB) | 16384 | 16384 | 12288 | 12288 |

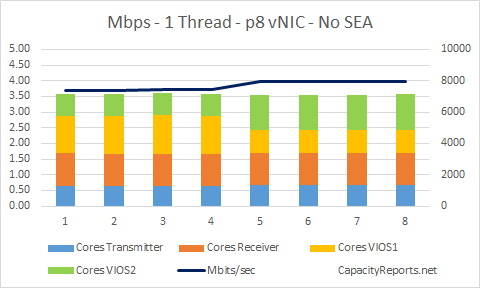

1 Thread Test

Client command: /usr/bin/iperf -c 192.168.30.181 -fm -P1 -l1M -t60

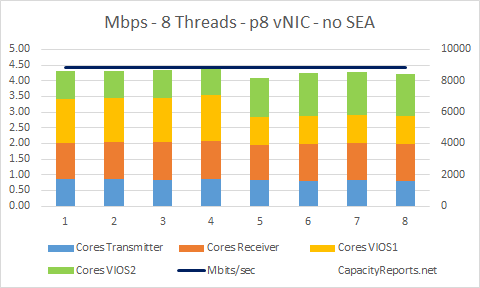

8 Threads Test

Client command: /usr/bin/iperf -c 192.168.30.181 -fm -P8 -l1M -t60

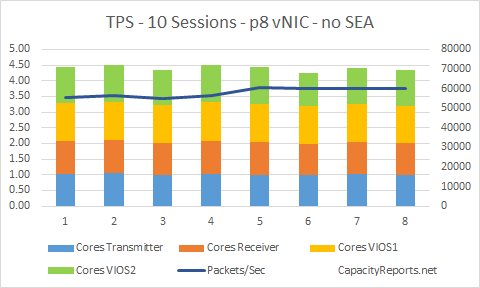

10 Sessions Maximum Transactions per Second

Client command: /usr/bin/netperf -H 192.168.30.181 -l60 -v50 -t TCP_RR -- -r 700 -D

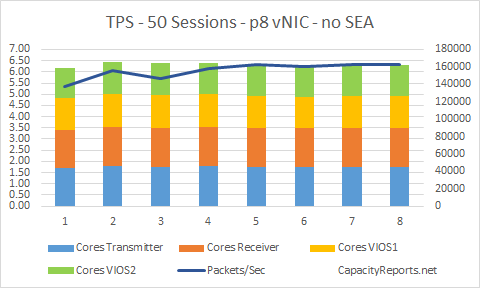

50 Sessions Maximum Transactions per Second

Client command: /usr/bin/netperf -H 192.168.30.181 -l60 -v50 -t TCP_RR -- -r 700 -D
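
The 10- and 50-session tests list the same single-session client command, and the post does not say how the concurrent sessions were started. One possible way to drive N sessions at once is sketched below, under that stated assumption; `run_sessions` is a hypothetical helper, not part of netperf.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Client command from the TCP_RR tests in this post.
NETPERF_CMD = ["/usr/bin/netperf", "-H", "192.168.30.181", "-l60", "-v50",
               "-t", "TCP_RR", "--", "-r", "700", "-D"]


def run_sessions(cmd, sessions):
    """Start `sessions` copies of cmd at roughly the same time.

    Returns a list with the standard output of each session.
    """
    def one_session(_index):
        done = subprocess.run(cmd, capture_output=True, text=True, check=True)
        return done.stdout

    # One worker thread per session, so all client processes overlap.
    with ThreadPoolExecutor(max_workers=sessions) as pool:
        return list(pool.map(one_session, range(sessions)))
```

For example, `run_sessions(NETPERF_CMD, 10)` would start ten clients against the same server. The per-session transaction rates would then need to be read from each output and summed to get an aggregate figure; that parsing is left out here because the report layout depends on the netperf verbosity level.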